AnatomyNow

June 2019 - July 2019

Introduction:

A team of Computer Science students at Duy Tan University has been developing a program called AnatomyNow. The program allows you to examine accurate 3D models of human anatomy. Although the program is very impressive, it is unwieldy and difficult to use. A small team of Oswego students collaborated with the Duy Tan team to help design a new interface for the program.

The AnatomyNow program consisted of two main screens: the Home screen and the 3D screen. The Home screen was where you selected which organ system you wanted to examine. The 3D screen was where you examined 3D models of organs in great detail.


Screenshot of Home Screen

Screenshot of 3D Screen

The Problem:

The AnatomyNow software is very powerful but unwieldy and confusing to use. It is difficult to navigate and operate.

The Goal:

Redesign the interface of the program so it is more user-friendly. The redesign must contain all the features of the old design while being easier to use and navigate, and less prone to user error.

My Role:

This project was led by me and another graduate student. We also had an undergraduate student assisting us and were under the guidance of a professor.

Limitations:

This project was completed during our stay in Vietnam, so we had roughly 2 months to start and finish it. Since we were in a foreign country, there was a strong language barrier that limited the number of participants we could find.

Cognitive Walkthrough

At the beginning of this project, none of the team had any experience with the AnatomyNow software. To assess the software, we decided to conduct a cognitive walkthrough. We had 3 users who had never used the program before work through it. These users were given a few tasks to complete, based on the main use cases of the software.

The tasks we asked participants to complete were:

1. Open the skeletal system in 3D view.

2. Locate the Humerus bone and read the description.

3. Return to the main screen and view the Skull in 3D view.

4. Look through the top of the Skull.

5. Return the model to its original state.

6. Add the four front teeth into the pinned list.

7. Return to the main screen.

8. View the internal components of the brain together in full screen. (Only the internal components)

9. Return to the main screen and view the animated simulation of the heart.

10. Exit the program.

While participants were using the program, we observed them to see how they navigated through it and what they struggled with. We also recorded their walkthroughs and took notes on our observations.

After everyone completed the Cognitive Walkthroughs, we went over our notes and looked for common problems that everyone seemed to have while using the program. These problems were compiled into a list from highest to lowest priority.

Problem List:

- Buttons are unlabeled or poorly labeled. This leads to a lot of confusion about their purpose and what they do. Everyone resorted to trial and error to figure out what the buttons do.

- No instructions for moving or interacting with the 3D models. Figuring out the controls for interacting with the model was also done by trial and error.

- No warning for options that had a bigger impact. Exiting the program was a 1-click button that everyone pressed accidentally.

- Controls for the menu were inconsistent. Certain menu options took only 1 click while other options took 2 clicks. This inconsistency made menu navigation take extra time.

- The arrangement of buttons feels somewhat random. Buttons with different functions are placed near each other, obscuring the purpose of each button.

- Several smaller technical errors, such as not all words changing when switching language options, or the search bar not remembering previous searches.

While the Cognitive Walkthroughs were being run, a different part of the team created user personas. The personas we created were of a medical doctor who could use the program to explain medical problems to their patients or nurses, and of a professor who might use the program to help with their lectures.

Professor Persona

Doctor Persona

Redesign

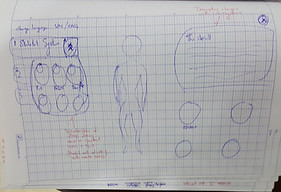

After the Cognitive Walkthroughs were completed and we had a better understanding of the pain points of the program, we started working on the redesign. Every member of the team created a rough draft sketch of a redesign. Once everyone had made a sketch, we came together to bounce ideas off each other, keep the ideas we liked from each sketch, and combine the best parts into a single redesign.

Design Sketches from everyone on the team

For this redesign we kept in mind the problems of the original design: it was unclear what the buttons did, it gave users little feedback or warning, which caused confusion, and parts of the menu were unintuitive to use.

To address these problems, we gave the buttons better labels so their purpose was clearer. Buttons were also grouped by function: similar functions were grouped together while different functions were placed in separate groups. Buttons that caused more impactful changes were given warnings before the changes went through.


Redesign's Home Page


Redesign's 3D Page

With this redesign complete, we created a prototype of it that could mimic the functions of the main program. The prototype redesign was capable of completing all the tasks from the Cognitive Walkthroughs.

User Testing

After our redesign prototype was completed, we had to put it to the test to see if it was better than the original design. To find out whether it was an improvement, we used an A/B testing method to compare both designs.

We conducted the A/B testing in a similar manner to the Cognitive Walkthroughs. We gave participants either the redesign prototype or the original design prototype and asked them to complete a few tasks within it. Participants were monitored while using the prototypes and only given help if they became stuck and unable to proceed. The students conducting the tests also took notes on how the participants used the prototypes, along with any other observations they had.

Pictures from our user testing day

After completing the main tasks in the testing, participants were asked to complete a questionnaire based on their experience. The questionnaire consisted of questions from the System Usability Scale (a standardized survey to measure the overall usability of a product), a rating scale of how difficult each task was, and lastly a few demographic questions.

Because we were in a foreign country with few English speakers and on a time limit, we only had a limited number of test subjects. Luckily, we were able to set up testing in a local restaurant and get a few locals and some tourists to participate. We were only able to get 13 participants to test our prototypes, but it was enough to get some clear results.

Observation notes from user testing; we made a template for recording notes

A picture of us and our many generous user testers

Results

From our A/B testing we were able to directly compare the original design to the redesign. We only had 13 participants in total due to time constraints and because we were in Vietnam, where it was hard to find English speakers. For testing, we had 6 participants use the original design and 7 participants use the redesign.

System Usability Score:

The System Usability Scale (SUS) gives an overall rating of usability for a system. It produces a score out of 100, with a higher score indicating better usability. The participants' scores within each group were averaged to create a score for each design.

By comparing the scores of the two groups, we can see that the average score for the redesigned interface was 19.7 points higher than the average score for the original interface. This result indicates that the redesigned interface had substantially higher overall usability than the original interface, and that the redesign was successful in increasing the usability of the interface.
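As an illustration of how per-participant SUS scores and group averages like those above are typically computed, here is a minimal sketch. It assumes the standard SUS scoring rule (ten items rated 1 to 5, odd-numbered items contribute the rating minus 1, even-numbered items contribute 5 minus the rating, and the sum is multiplied by 2.5). The write-up does not say exactly how the team scored the questionnaires, and the function names here are hypothetical.

```python
def sus_score(responses):
    """Return a 0-100 SUS score from ten 1-5 ratings (standard SUS scoring)."""
    if len(responses) != 10:
        raise ValueError("SUS requires exactly 10 responses")
    if any(r not in (1, 2, 3, 4, 5) for r in responses):
        raise ValueError("each response must be an integer from 1 to 5")
    total = 0
    for item_number, rating in enumerate(responses, start=1):
        if item_number % 2 == 1:
            # Odd items are positively worded: higher rating is better.
            total += rating - 1
        else:
            # Even items are negatively worded: lower rating is better.
            total += 5 - rating
    return total * 2.5


def mean_sus(group_responses):
    """Average the SUS scores of every participant in one test group."""
    scores = [sus_score(r) for r in group_responses]
    return sum(scores) / len(scores)
```

Calling `mean_sus` once per group (original and redesign) and subtracting the two averages gives the kind of point difference reported above.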

Task Difficulty Rating:

During the testing we asked all participants to complete 5 tasks using either the original design or the redesign. After completing the tasks and the SUS questions, we asked each participant to rate the difficulty of each task. The ratings were on a 1 to 5 scale, with 1 being very easy and 5 being very hard. The scores from each group were averaged for each task.
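The per-task averaging described above can be sketched as follows. This is only an illustration: the data layout (one list of ratings per participant, in task order) and the function names are assumptions, not the team's actual analysis.

```python
from statistics import mean


def mean_task_ratings(ratings_by_participant):
    """Average the 1-5 difficulty ratings per task across one group.

    ratings_by_participant: one list per participant, holding that
    participant's rating for each task in task order (assumed layout).
    """
    # zip(*rows) regroups the ratings so each tuple holds one task's ratings.
    return [mean(task_ratings) for task_ratings in zip(*ratings_by_participant)]


def tasks_rated_easier(original_means, redesign_means):
    """Return 1-based task numbers where the redesign's mean rating is lower."""
    return [
        task_number
        for task_number, (orig, new) in enumerate(
            zip(original_means, redesign_means), start=1
        )
        if new < orig  # lower rating means the task felt easier
    ]
```

Running `mean_task_ratings` on each group and passing both results to `tasks_rated_easier` lists the tasks that participants found easier on the redesign.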


The results show that the tasks were easier to complete on the redesign, as 4 out of the 5 tasks had a lower difficulty score. Tasks 1 and 2 showed a large difference in difficulty rating. Task 4 was rated as easier on the original, so there is still room for improvement in the redesign.

Conclusion

From our testing results we were able to show that our redesign was more user-friendly than the original design by a clear margin. The redesign earned a better SUS score and was rated as easier to use. Although the redesign was successful, there is still room for improvement, as certain aspects of it weren't better than the original design. Given the limited time frame and the challenges of working in a foreign country, I think our efforts were fruitful.

Although this project was completed a long time ago, the prototypes are still up if you want to play with them:

Link to Original Design:

Link to Redesign: